For everyone: just use your brain, instead of believing everything you read on the net.

All the tested drivers have very similar frequency responses.

The distribution of harmonics, THD, and CSD are not so similar.

What makes the difference in sound?!

Well, I dismissed the Dayton immediately. The others I had a hard time with; resonance may be the culprit here. Personally, I have a hard time believing that something 45 dB under the fundamental would be audible, as in XRK's 95 dB example.
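For scale, here is a quick sketch of the arithmetic behind "45 dB under the fundamental" in the 95 dB example. It only converts a level difference into an absolute SPL and a distortion percentage. It deliberately ignores masking and the ear's frequency-dependent sensitivity, which are what actually decide audibility.

```python
def harmonic_level(fundamental_spl_db: float, db_below: float) -> tuple[float, float]:
    """Return (absolute SPL of the component in dB, its level as % of the fundamental).

    A component db_below dB under the fundamental has an amplitude ratio of
    10 ** (-db_below / 20); multiplying by 100 gives the familiar distortion %.
    """
    harmonic_spl = fundamental_spl_db - db_below
    percent = 100.0 * 10 ** (-db_below / 20.0)
    return harmonic_spl, percent


# The case discussed above: a component 45 dB under a 95 dB fundamental.
spl, pct = harmonic_level(95.0, 45.0)
print(f"component at {spl:.0f} dB SPL, i.e. {pct:.2f} % of the fundamental")
```

So the component in question sits around 50 dB SPL, or roughly half a percent. Whether that is audible depends on where it lands relative to the fundamental's masking, not on the number alone.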

Peter

Experts should learn and understand what people prefer, not force their own preference.

If you read the papers from Toole and Geddes instead of taking a single quote out of a much longer story, you'll learn that their experimentation has been aimed exactly at learning what people prefer, while excluding the (possibly off-beat) preferences of individuals including themselves.

Look at the Klang+Ton measurements for the 10F, B80, and TC9.

Talking about the TC9, has anyone tried to find out why it was the least preferred driver in this round?

[1] Do you think it is just coincidence or a random occurrence? If the test were repeated, could it turn out to be the most preferred?

[2] Do you think it is the driver's characteristics that people didn't like, or just a side effect of imperfect recording? If the latter, which part of the imperfection?

[3] Regarding the real sound represented by the TC9 in this test, which characteristic of the sound do you think caused the driver to land at the bottom of the preference list?

If we can answer or understand that, we will have a better chance of making great speakers.
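One way to approach question [1] is to ask how often a driver would land at the bottom purely by chance if nobody actually preferred any driver. Below is a minimal Monte Carlo sketch. It assumes a hypothetical poll in which each listener casts one first-choice vote uniformly at random. The listener and driver counts are placeholders, not the real numbers from this round.

```python
import random


def prob_last_by_chance(n_listeners: int, n_drivers: int,
                        trials: int = 20000, seed: int = 1) -> float:
    """Estimate how often driver 0 gets the fewest first-choice votes (ties included)
    when every listener picks uniformly at random, i.e. no driver is truly preferred."""
    rng = random.Random(seed)
    last_count = 0
    for _ in range(trials):
        votes = [0] * n_drivers
        for _ in range(n_listeners):
            votes[rng.randrange(n_drivers)] += 1
        if votes[0] == min(votes):  # driver 0 is last or tied for last
            last_count += 1
    return last_count / trials


# Hypothetical poll size: 30 listeners choosing among 6 drivers.
print(prob_last_by_chance(30, 6))
```

If that probability is not small, a last-place finish on its own says little. A repeated test, or a much larger N, would be needed before blaming the driver.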


Yes, of course I have read the Toole and Geddes papers [and of course I cannot quote a much longer story, only a representative short one].

And I'm not disagreeing with you.


The TC9's response is similar to that of the TG9 and 10F, but the latter two both have a more even frequency response. So I don't view it as the least preferred; it suffered the same fate as the TG9 in an earlier test. Similar in sound but not the same.

Nothing to worry about if you don't mind using a little EQ. Still, one could have a preference for a particular cone material.


When I was working in Thailand, local furniture technicians kindly described colour as: "Same, same, but not the same"

I bet that in a blind test, if I EQ'd the "all in the family" TG9, TC9, and 10F flat, we would all be hard-pressed to tell them apart.
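"EQ them flat" usually means a few parametric cuts on the peaks. Here is a minimal sketch of one such cut, using a standard peaking biquad in the form given in the widely used RBJ "Audio EQ Cookbook". The 7 kHz / -3 dB / Q = 4 values are hypothetical, not taken from any measurement in this round.

```python
import cmath
import math


def peaking_eq(f0: float, gain_db: float, q: float, fs: float):
    """Peaking-EQ biquad coefficients (b, a), normalised so a[0] == 1 (RBJ cookbook form)."""
    a_lin = 10 ** (gain_db / 40.0)
    w0 = 2 * math.pi * f0 / fs
    alpha = math.sin(w0) / (2 * q)
    b = [1 + alpha * a_lin, -2 * math.cos(w0), 1 - alpha * a_lin]
    a = [1 + alpha / a_lin, -2 * math.cos(w0), 1 - alpha / a_lin]
    return [x / a[0] for x in b], [x / a[0] for x in a]


def response_db(b, a, f: float, fs: float) -> float:
    """Magnitude of the biquad at frequency f, in dB."""
    z1 = cmath.exp(-1j * 2 * math.pi * f / fs)  # z^-1 on the unit circle
    num = b[0] + b[1] * z1 + b[2] * z1 * z1
    den = a[0] + a[1] * z1 + a[2] * z1 * z1
    return 20 * math.log10(abs(num / den))


# Hypothetical cut: pull down a +3 dB peak at 7 kHz with Q = 4, at 48 kHz sample rate.
b, a = peaking_eq(7000.0, -3.0, 4.0, 48000.0)
print(round(response_db(b, a, 7000.0, 48000.0), 2))
```

EQ like this flattens the on-axis curve, but it changes on- and off-axis output equally and does nothing about nonlinear distortion. That is why the high-SPL case may still separate the drivers.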

But as 5e pointed out, maybe recording them all playing at 90 dB would provide the duress needed for the differences in nonlinear distortion to come through.

Two cases: at low SPL, we will have a tough time picking; at high SPL, the differences will probably be easier to distinguish.


I knew someone would ask, so I actually measured the average and peak SPL with the calibrated mic while the Zoom H4 was recording. The SPL was set by clip 1 (as all 3 clips were on the same track, I could not adjust the volume between them).

All measurements were made at 0.50 m (19 inches) from the driver under test.

Barracuda had an average SPL of 89 dB at 0.5 m, with peaks of about 92 dB. The jazz track was lower, at about 85 dB average and 89 dB peak; the pop vocal clip 3 was the same as Barracuda, at 89 dB average and 92 dB peak.

The 89 dB average at 0.5 m is about the same as 83 dB at 1 m, or a little under 2.83 V into a typical driver with 85 dB sensitivity.
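The 0.5 m to 1 m conversion above is the inverse-square law (about 6 dB per doubling of distance), and the voltage figure follows from the sensitivity rating. Here is a small sketch of both steps. It assumes free-field conditions and point-source behaviour, which is only an approximation for a driver on a baffle at 0.5 m.

```python
import math


def spl_at_distance(spl_db: float, d_from_m: float, d_to_m: float) -> float:
    """Free-field inverse-square law: subtract 20*log10(distance ratio), ~6 dB per doubling."""
    return spl_db - 20 * math.log10(d_to_m / d_from_m)


def drive_voltage(target_spl_db: float, sensitivity_db: float, ref_volts: float = 2.83) -> float:
    """Voltage needed for target_spl_db at 1 m, given sensitivity in dB @ ref_volts / 1 m."""
    return ref_volts * 10 ** ((target_spl_db - sensitivity_db) / 20)


spl_1m = spl_at_distance(89.0, 0.5, 1.0)
print(f"{spl_1m:.1f} dB at 1 m, needing {drive_voltage(spl_1m, 85.0):.2f} V")
```

This lands at roughly 83 dB and about 2.2 V, consistent with "a little under 2.83 V" for an 85 dB driver.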


>>> We have to learn from this driver test. People prefer 10F, B80 and even that bloody Dayton.

I agree with Jay.

We all know that frequency response is the dominant factor in making the "voice" of a driver pleasant, intolerable, or great. Assuming that the drivers are all fairly uniform and flat, there must be something else.

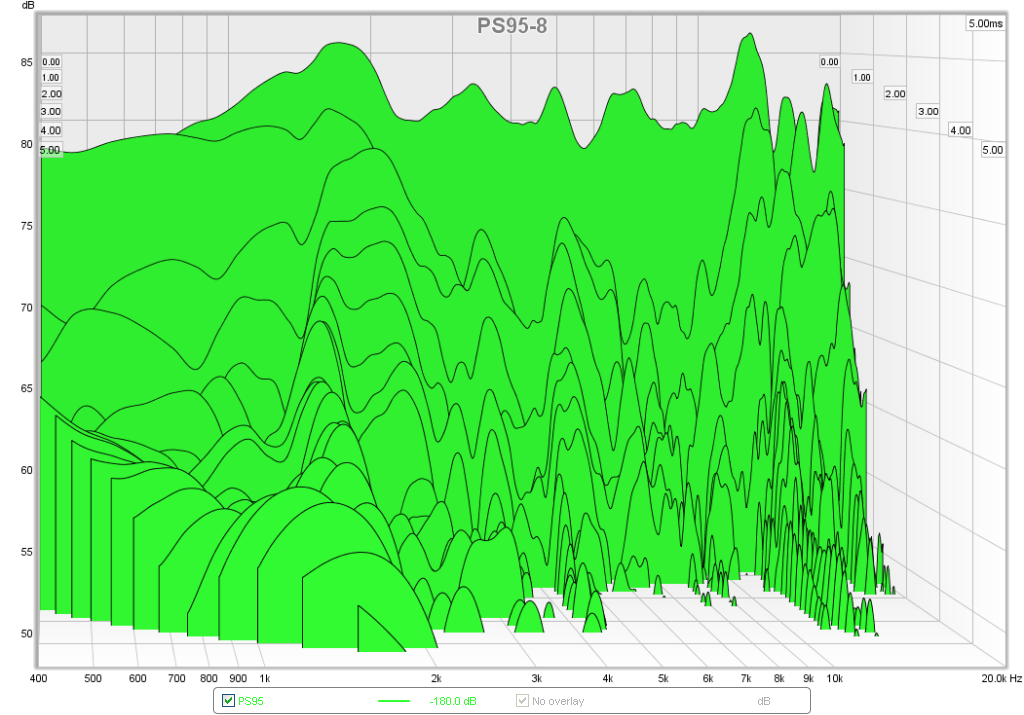

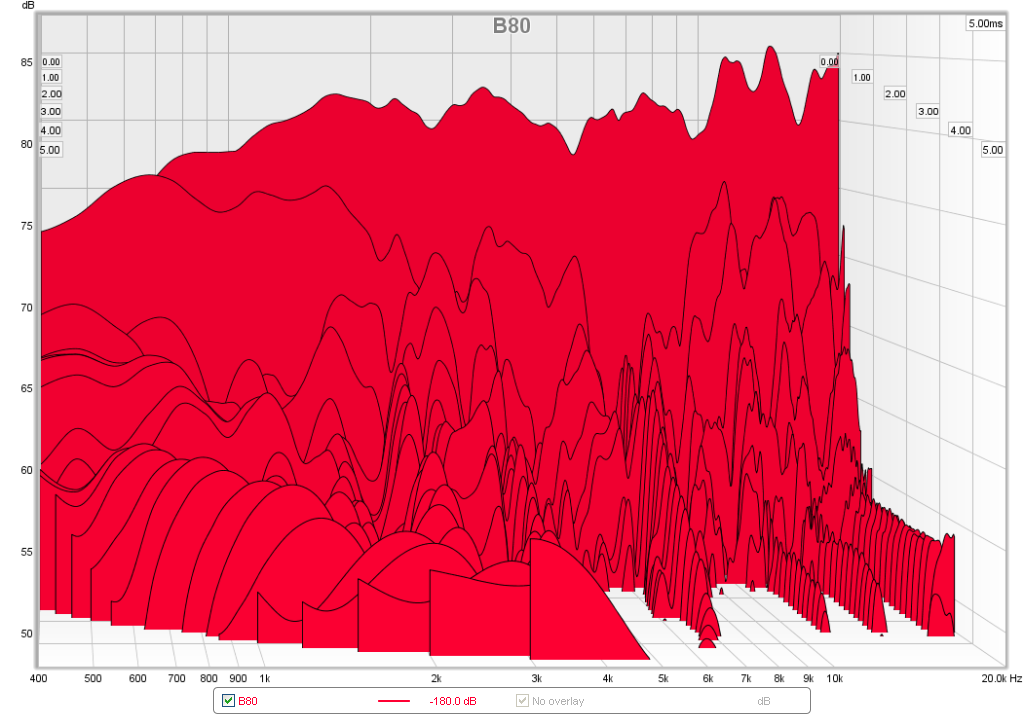

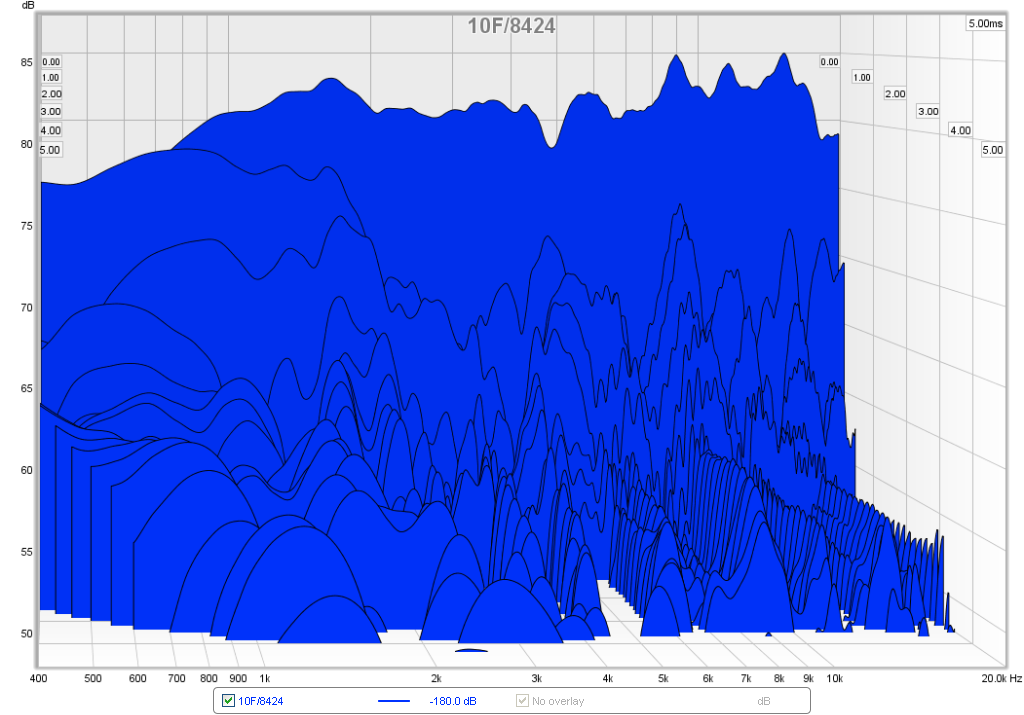

I do see that the CSD of the PS95 resembles the B80 to a certain extent. The TC9/TG9/10F all have a steep, sheer wall, but then show residual energy storage and dissipation out in the 6k to 12k region, seen as elongated furrows that extend out in time. I wonder if the similarities of the B80 and PS95 have to do with what a phase plug does. There is no dustcap here to "ring" on and on, and those furrows sit at about the resonant frequencies of a dustcap.

I have not measured the HD of the 10F or PS95 under duress yet, but I suspect that at 89 to 92 dB the 10F sounds cleaner than the PS95, and that is why many people may have picked it over the PS95. Many people threw the PS95 out immediately, probably because they picked up on its higher HD.

Finally, the sound of a paper cone may actually be preferred here for its naturalness. The TC9, although paper, has a rather thick, almost plastic-like coating on it. The coating on the B80 appears thinner and finer. There is no coating on the PS95.

Fiberglass, although able to render beautifully fluid mids and vocals, sounds "un-natural", as Jay characterized it, even though he prefers it.

I think the uncoated paper cone of the PS95, combined with a phase plug that eliminates the energy storage and decay associated with dustcaps, may give the PS95 an edge in resolving finer detail. It is a measurable fact that the PS95 solidly reaches 20 kHz, whereas the TC9/TG9/10F family is already on its way down there. The same goes for the B80: it has real 20 kHz ability.

Here is the CSD of the PS95:

Here is the CSD of the B80:

By comparison, here is the CSD of the 10F (the TG9 and TC9 are similar):

Careful now. There are a number of factors at play here. This is a tight group with similar on-axis frequency response, though not too similar: if you look carefully, even the best of them, the TC9 and 10F, have peaks and dips of 3 dB or more, and these would be very audible. There is simply not enough data to pin down a single reason why people preferred one driver over another. It is human nature to want to quickly assign a cause to an observation, but let's not jump to conclusions so soon.

If you want to find the reasons why drivers with similar frequency response could sound different, you need to first look at what's happening off-axis. Then look at linearity. For example, does the response change at 95 dB compared to 80 dB? An excellent resource on quality measurements, and on how measurements should ideally be done, is here:

SoundStageNetwork.com | SoundStage.com

SoundStage has a library of measurements for a number of loudspeakers. If you want some examples of stellar performance, look at the KEF Reference 201/2 and the Revel Ultima Salon2.

SoundStage! Measurements - Revel Ultima Salon2 Loudspeakers (12/2009)

SoundStage! Measurements - KEF Reference 201/2 Loudspeakers (12/2007)

- Look at how flat the response is through the entire frequency range. Note the range on the graph: 50 dB.

- Look at how the off-axis response is a replica of the on-axis response.

- Look at the flatness and smoothness of the listening window response.

- Look at the linearity at different SPLs.
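The linearity check in the last point amounts to running the same sweep at two drive levels and seeing whether the louder one is simply the quieter one shifted up. Here is a minimal sketch. It assumes both sweeps were taken at the same mic position with a known level step. The readings are made-up placeholders, not data for any driver in this test.

```python
def compression_db(low_db, high_db, level_step_db):
    """Per-frequency compression between two sweeps taken level_step_db apart.

    0 means perfectly linear; a positive value means the high-level sweep
    came out that many dB short of the low-level sweep shifted up by level_step_db.
    """
    return [lo + level_step_db - hi for lo, hi in zip(low_db, high_db)]


# Hypothetical readings (dB SPL) for sweeps 15 dB apart (80 dB vs 95 dB).
freqs = [50, 100, 1000, 10000]
at_80 = [74.0, 79.5, 80.0, 80.5]
at_95 = [86.5, 93.8, 95.0, 95.4]
for f, c in zip(freqs, compression_db(at_80, at_95, 15.0)):
    print(f"{f:>6} Hz: {c:+.1f} dB")
```

In the made-up numbers, the bass compresses first, which is typical of a small driver running out of excursion.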

Of course, with digital EQ one can get an even flatter on-axis response than these multi-thousand-dollar speakers. But getting smoothness in the response, i.e., getting rid of the small perturbations, and getting a uniform off-axis response requires careful design. If you look at the listening preference equation that Olive came up with, he weighted the flatness and smoothness of the on-axis response very high, followed by the off-axis response, and also gave a very high weight to the evenness and depth of the bass response. This is what people, i.e., you and I, prefer, folks. This is what gives neutral, engaging, enjoyable, non-fatiguing, go-through-your-entire-record-collection sound.
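For reference, here is a sketch of Olive's preference equation as it is commonly quoted from his AES regression papers. The metric descriptions in the docstring are loose summaries. Computing the four inputs from spinorama data is not shown and would need the original definitions. The model was fitted to complete loudspeakers, so applying it to bare full-range drivers is an assumption.

```python
import math


def olive_preference(nbd_on: float, nbd_pir: float, lfx: float, sm_pir: float) -> float:
    """Predicted preference rating (commonly quoted coefficients of Olive's model).

    nbd_on  : narrow-band deviation of the on-axis response, in dB (lower = flatter)
    nbd_pir : narrow-band deviation of the predicted in-room response, in dB
    lfx     : log10 of the low-frequency extension point in Hz (lower = deeper bass)
    sm_pir  : smoothness (r^2 of a straight-line fit) of the predicted in-room response
    """
    return 12.69 - 2.49 * nbd_on - 2.99 * nbd_pir - 4.31 * lfx + 2.32 * sm_pir


# Hypothetical metrics, purely to show the call: -6 dB point at 40 Hz.
print(round(olive_preference(0.4, 0.3, math.log10(40.0), 0.85), 2))
```

The signs match the description above. Roughness on-axis and in-room, and a higher bass cutoff, pull the score down; a smooth in-room curve pushes it up.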


The Tang Band drivers that were tested had paper (bamboo) cones with pointy phase plugs, and the Fostex had paper (banana?) cones with dustcaps. Neither fared well. I wonder what goes on behind the cone that produces a winner?

I have the Fostex 127e (similar spikes around 7 kHz to their current models) and always thought the lightweight cone (and surround) made the driver sound excitable and bright. The lightweight cone helps provide high efficiency, but I wonder if a thin coat of damping (maybe dammar) would calm things? Or maybe coating the cone is a bad idea in general, and spending the time/money on a better driver is the better idea?

I love these comparison tests because now I can buy with more confidence. Even though I rated the Dayton last, I like that it sounded a bit different from the SS/Vifa, which I already own. I'm looking forward to getting it onto a baffle.


Regarding the CSD plots: it is not only that the CSD profile is a little different; the decay of the 10F is broadly longer, particularly in comparison with the PS95, which only approaches the same decay time in the last octave, with much of the mids and lower HF decaying in half the time or less.

Thus I think that the result has a lot to do with listeners' tolerance of CSD 'hangover' in different frequency bands. As most would agree, the telephone band is the most critical, and this is where the PS95 excels.

Given that all the drivers have limited variance in THD (when you consider that a 2 dB variance in a -40 dB harmonic is neither here nor there), you have a winning combination of desirable attributes: low THD and very good CSD.

Also, many are accustomed to hearing decay time increase at HF (compared with the mids, where it is probably more audible), and there's another reason.

For want of a real scientific term: the spectral mean decay time of the 10F is significantly higher.

This is what I would comparatively call 'smearing' (to borrow an audiophool review-speak term).
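"Spectral mean decay time" isn't a standard metric, but one plausible reading of it can be sketched. For each frequency column of a CSD, find when the level has dropped a chosen number of dB below the first slice, then average across frequencies. The 20 dB threshold and the tiny CSD grid below are hypothetical. With linearly spaced FFT bins, an equal-weight average over-weights the top octaves, so log-spaced bins would be the fairer choice.

```python
def decay_times(csd_db, times_ms, drop_db=20.0):
    """Per-frequency decay time from a CSD grid (rows = time slices, columns = frequencies, dB).

    Returns, for each column, the first time at which the level is drop_db below
    the t = 0 slice; columns that never get there report the last time slice
    (a lower bound on the true decay time).
    """
    result = []
    for k in range(len(csd_db[0])):
        start = csd_db[0][k]
        t_hit = times_ms[-1]
        for row, t in zip(csd_db, times_ms):
            if row[k] <= start - drop_db:
                t_hit = t
                break
        result.append(t_hit)
    return result


def spectral_mean_decay(csd_db, times_ms, drop_db=20.0):
    """Plain average of the per-frequency decay times (equal weight per column)."""
    t = decay_times(csd_db, times_ms, drop_db)
    return sum(t) / len(t)


# Hypothetical two-frequency CSD: the second column rings on for longer.
times = [0.0, 0.5, 1.0, 1.5, 2.0]
csd = [[90, 90], [80, 85], [65, 78], [60, 72], [55, 68]]
print(decay_times(csd, times), spectral_mean_decay(csd, times))
```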

All in all, the most interesting subjective result AND objective measurement session so far.

Now I really want those PS95s.

Nice one X


We have to learn from this driver test. People prefer the 10F, the B80, and even that bloody Dayton. Do you think those ears do not matter? Experts should learn and understand what people prefer, not force their own preference. If you know precisely that directivity is the key to a great speaker, then you also know precisely that you will get rich, because everyone will buy your speaker.

I don't necessarily agree that "we" can assert that, especially in light of the many comments about this round being quite hard to discern, and doubly so in light of the terrible experimental design known as internet audio testing. Please, please, please do not take this as any sort of disparaging comment towards X's work (I admire his enthusiasm and productivity!), but we have to acknowledge the difficulties (and utter lack of control) of such comparisons and temper our extrapolations from these polls.

This is the same reason that epidemiological studies are so hard, and at least those use extremely large Ns in order to (hopefully) average out individual variations. They're also largely used as preliminary data to develop new hypotheses, which are then tested with much more targeted studies.

E.g., which driver would you call the best if one of the lowest-ranking drivers in this comparison was consistently everyone's second choice? Would that not tilt the table? What are the unaddressed (potential or real) biases in this data? Is it even large enough to "null" some of them? You *might* be able to when you lump drivers together.
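The "everyone's second choice" scenario is easy to make concrete. Here is a small sketch comparing a first-choice (plurality) tally with a Borda count on the same ballots. The ballots are hypothetical and invented only to show how the two methods can disagree; they are not this round's votes.

```python
from collections import Counter


def plurality_winner(ballots):
    """Driver with the most first-choice votes."""
    return Counter(b[0] for b in ballots).most_common(1)[0][0]


def borda_scores(ballots):
    """Borda count: on an n-item ballot, 1st place earns n-1 points, ..., last earns 0."""
    scores = Counter()
    for ballot in ballots:
        n = len(ballot)
        for rank, driver in enumerate(ballot):
            scores[driver] += n - 1 - rank
    return scores


# Hypothetical ballots: C gets the fewest first choices but is second on most ballots.
ballots = [["A", "C", "B"]] * 4 + [["B", "C", "A"]] * 3 + [["C", "A", "B"]] * 2
print(plurality_winner(ballots))             # → A
print(borda_scores(ballots).most_common(1))  # → [('C', 11)]
```

Driver C finishes last on first choices yet wins on Borda points. Which one is "best" depends on how the poll is scored, before any question of bias or sample size.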

Let's remember these are for fun and otherwise fall under the category of GIGO for making legitimate distinctions.

I wonder which is worse: a CSD that shows significant ringing (decay) at all frequencies, or one that shows less on average but very frequency-selective ringing? It would seem that relatively even wideband ringing might sound more natural than significant ringing at only certain frequencies... (?)

If the mic and H4 are considered neutral enough, then one way to increase the subjective difference between the various drivers would be to make a recording, then play that recording through the speakers a second time, and record that. Maybe even do it a third time. I've read that this technique will show a big difference within 2 or 3 passes.
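Why repeated passes exaggerate the differences can be seen in a linear-only sketch. In the small-signal case, each pass through driver + mic + recorder multiplies magnitudes, so the dB deviations simply add up per pass. The deviation values are hypothetical. Nonlinear distortion also accumulates but is not modelled here. Note that the mic/H4 error compounds too, equally for every driver, which is why the chain has to be neutral.

```python
def compounded_response(driver_db, mic_db, passes):
    """Linear-only model of re-recording: each pass adds the driver's and the
    mic/recorder's dB deviations at every frequency point."""
    return [passes * (d + m) for d, m in zip(driver_db, mic_db)]


# Hypothetical deviations at three frequency points (dB re flat).
driver = [0.0, 1.0, -2.0]
mic = [0.0, 0.5, 0.0]
for n in (1, 2, 3):
    print(n, compounded_response(driver, mic, n))
```

A 1.5 dB bump after one pass becomes 4.5 dB after three, which is why the technique makes small differences obvious.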


We do know that treatment can get rid of most of that 7 kHz peak on the Fostex. There is a thread here that covers most of it (with some further improvements since that thread slowed down).

dave


I share similar questions about broadband versus frequency-selective ringing.

I instinctively feel broadband decay is indicative of smear and loss of detail, but is that actually apparent? Or does it manifest as a laid-back, 'smooth' sound?

I'd also instinctively feel that narrow, higher-Q peaks in the CSD would:

1) perhaps be ignored by the ear after a period of acclimatization

2) be picked out by the careful listener as indicative of small, narrow-Q resonances

The latter point would seem to be borne out by the 'Marmite' response to the PS95 from some, who clearly heard something they disliked strongly. But conversely, the lack of broadband smear drew the opposite opinion from those who (subconsciously or otherwise) ignored the narrow spikes in decay time.
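A rough way to connect "narrow Q" to the ridges a CSD shows: for a single second-order resonance, the envelope decays as exp(-t/tau) with tau = Q/(pi*f0). Ring time therefore grows in proportion to Q and shrinks with frequency. Here is a sketch under the assumption of one isolated resonance; real cone and dustcap breakup modes overlap and interact. The 8 kHz value is just an example inside the 6k to 12k region discussed earlier.

```python
import math


def ring_time_ms(f0_hz: float, q: float, drop_db: float = 30.0) -> float:
    """Time for an isolated second-order resonance to ring down by drop_db.

    Envelope time constant tau = Q / (pi * f0); each tau is 20*log10(e) ~ 8.686 dB.
    """
    tau_s = q / (math.pi * f0_hz)
    return 1000.0 * tau_s * drop_db / (20 * math.log10(math.e))


for q in (1, 5, 20):
    print(f"Q={q:>2} at 8 kHz: {ring_time_ms(8000, q):.2f} ms to fall 30 dB")
```

A low-Q, broadband decay fades within a fraction of a millisecond. A Q of 20 at the same frequency rings for several milliseconds as one narrow ridge, which is the kind of spike a careful listener might pick out.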


- A Subjective Blind Comparison of 2in to 4in drivers - Round 4