Preis (in your own post) did not agree with this assessment, and I agree with Preis. His study was much more complete. I do not know the conditions of the Holman claim. I'll stick with Preis and the 1 ms finding.

Hi Earl,

"...Preis states “the tolerances shown.... are not directly applicable to speech or music signals irradiated by loudspeakers in a reverberant environment. Most likely, the perceptual thresholds for these conditions would be at more than twice those shown”. Essentially, the data suggests that for high quality music or speech reproduction in a reverberant environment intra-channel phase distortion of 1 msec is inaudible to a trained listener. Notice that this threshold is a relatively conservative statement and is still two orders of magnitude greater than that for inter-channel phase distortion.....”

Once again, Preis talks about "intra-channel" NOT "inter-channel".

The inter-channel threshold stays at 20 µsec or even less.

Best Regards,

Bohdan

I see the difference now; I missed that before. I have no knowledge of inter-channel timing differences in multi-channel setups, except stereo, but I know hearing very well, and some of the sources you were quoting were not very reputable. In stereo, 20 µsec would have virtually no effect. For stereo, see Blauert. Even a 2 ms shift in timing can be offset with a few dB of level increase.

We had some experience trying to synthesize the sounds of real objects, because it was impossible to record every scenario. In many cases we had to shift the phase relationship of various components in order to achieve a sound similar to the real thing. This is because sounds are characterized not only by their frequency content, but also by the phase and timing relationships of that content. Shifting the phase/timing relationship will make a sound different, sometimes giving a compressed impression. I am sure that people working in this area can give you a better feeling for how phase affects the replication of sound.
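A minimal sketch of the point that phase relationships change a sound even when the frequency content does not, assuming a synthetic tone of 20 equal-amplitude harmonics of 200 Hz (illustrative choices, not from the post). Both signals have identical magnitude spectra; one has all harmonics in phase, the other uses Schroeder phases, a known recipe for a low peak-to-RMS ratio. The crest factor is one measurable way a phase change alters how "peaky" or "compressed" a waveform looks.

```python
import numpy as np

def harmonic_tone(phases, f0=200.0, fs=48000, duration=0.05):
    """Sum of equal-amplitude harmonics of f0 with the given starting phases (radians)."""
    t = np.arange(int(fs * duration)) / fs       # 0.05 s = exactly 10 periods of 200 Hz
    k = np.arange(1, len(phases) + 1)[:, None]   # harmonic numbers as a column
    return np.sum(np.cos(2 * np.pi * k * f0 * t + np.asarray(phases)[:, None]), axis=0)

def crest_factor(x):
    """Peak-to-RMS ratio of a signal."""
    return np.max(np.abs(x)) / np.sqrt(np.mean(x**2))

n = 20
k = np.arange(1, n + 1)
aligned = harmonic_tone(np.zeros(n))                 # all harmonics peak together
schroeder = harmonic_tone(-np.pi * k * (k - 1) / n)  # Schroeder phases spread the peaks out

# Same magnitude spectrum, very different waveform peaks.
print(f"aligned crest factor:   {crest_factor(aligned):.2f}")
print(f"Schroeder crest factor: {crest_factor(schroeder):.2f}")
```

The aligned tone has a crest factor of 20/√10, about 6.3; the Schroeder-phased tone comes out far lower, although an FFT of either signal shows the same harmonic magnitudes.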

We certainly have the practical fact that LR crossovers are popular, and pianos sound like pianos on playback through these speakers.

Of course, depending on miking and mixing, pianos may sound like they are half the width of the room, in your lap, or even sometimes nicely distanced as on a stage...

...as I have experienced with the same system, same day - different recordings

jcx,

I agree, and I have also usually settled on that filter design. With a fourth-order L/R filter and some simple time-correction circuitry in an active crossover, it should be fairly easy to get a phase-coherent and time-coherent end product.

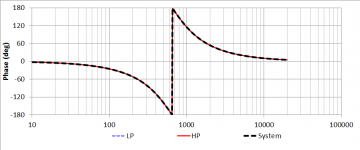

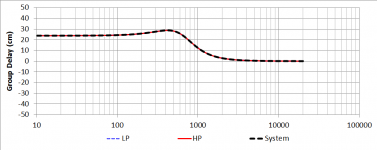

Phase coherent (as in: woofer's phase closely tracking tweeter's phase), yes.

Time coherent (as in: low frequencies arriving at the same time as high frequencies), no.

Marco
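A minimal sketch of Marco's distinction, assuming ideal analog fourth-order Linkwitz-Riley sections at a hypothetical 2 kHz crossover and ideal drivers (no driver roll-offs, no acoustic offsets). The low-pass and high-pass outputs stay in phase at every frequency, and their sum is flat in magnitude, but that sum is a second-order all-pass whose group delay at low frequencies is about 225 µs (2√2 divided by the crossover's angular frequency). Phase coherent, then, but not time coherent.

```python
import numpy as np

def lr4_responses(f, fc):
    """Ideal analog LR4 low-pass, high-pass and summed responses at frequencies f (Hz)."""
    s = 1j * np.asarray(f, dtype=float) / fc     # s normalized to the crossover frequency
    butter2 = 1 + np.sqrt(2) * s + s**2          # 2nd-order Butterworth denominator
    low = 1 / butter2**2                          # LR4 = Butterworth squared
    high = s**4 / butter2**2
    return low, high, low + high

def group_delay(f, h):
    """Group delay in seconds, -d(phase)/d(omega), from the unwrapped phase of h."""
    omega = 2 * np.pi * np.asarray(f, dtype=float)
    return -np.gradient(np.unwrap(np.angle(h)), omega)

fc = 2000.0                                       # hypothetical crossover frequency
f = np.logspace(1, np.log10(20000), 2000)         # 10 Hz to 20 kHz
low, high, total = lr4_responses(f, fc)

phase_gap = np.degrees(np.abs(np.angle(high / low)))   # woofer vs tweeter phase
print(f"max woofer/tweeter phase difference: {phase_gap.max():.2e} deg")
print(f"max deviation of |sum| from 1: {np.max(np.abs(np.abs(total) - 1)):.2e}")
print(f"group delay of sum at 10 Hz: {group_delay(f, total)[0] * 1e6:.0f} us")
```

The high/low ratio is (jω/ωc)⁴, a positive real number, which is why the two sections never drift apart in phase; the 360° rotation of the sum is what shows up as delay.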

I read a paper today by David Griesinger that might have changed the way I think about phase. He showed how the phase between the harmonics, not the fundamental, could be significant in audio perception. His argument was quite convincing and lucid. This is a different way of thinking about phase, and it's really more about group delay than just looking at a phase plot of a system. You have to look at the excess group delay in a region centered around 2 kHz: the phase of the fundamental does not matter, and by 5 kHz there is no synchronous neural activity to consider. But from about 1 kHz to 5 kHz, the group delay does matter. This is exactly what Lidia and I found as well. But we also found that it varied with SPL, and Griesinger does not discuss that aspect.

Earl,

This is what I have been trying to get at with the harmonic overlay with the fundamental. Though I am still far from understanding all the issues of how the ear and brain interpret sound, it simply made intuitive sense to me. I have much reading to do. Perhaps there are other aspects of hearing that we do not yet understand that will widen the bandwidth of this process?

Marco,

The post that you put up showing the time misalignment is why I mentioned time correction in the crossover network. Aligning phase and time in the crossover should go hand in hand if you want a coherent waveform across the crossover point. Otherwise, why care which filter order you pick, when any L/R filter can create that situation? The time delay caused by the 360-degree phase shift of the L/R crossover should be something we can fix, but then we also have to look at the phase shift introduced by the time-correction filter itself.
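A minimal sketch of why that delay is not a single number, assuming an ideal LR4 at a hypothetical 2 kHz crossover with drivers already aligned. The summed low-pass plus high-pass is the all-pass (1 − √2·s + s²)/(1 + √2·s + s²), whose group delay in closed form is 2√2·(1 + W²)/(1 + W⁴)/(2π·fc), with W = f/fc. Because it depends on frequency, a fixed delay cannot cancel it; a correction would need its own frequency-dependent delay (for example an inverse all-pass, usually realized as an FIR filter with added latency), which is exactly the caveat raised above.

```python
import numpy as np

def lr4_sum_group_delay(f, fc):
    """Closed-form group delay (s) of an ideal LR4 low-pass + high-pass sum.

    The sum is the all-pass (1 - sqrt(2) s + s^2) / (1 + sqrt(2) s + s^2), s normalized
    to fc; its group delay is 2*sqrt(2)*(1 + W^2) / (1 + W^4) / (2*pi*fc), W = f / fc.
    """
    w = np.asarray(f, dtype=float) / fc
    return 2 * np.sqrt(2) * (1 + w**2) / (1 + w**4) / (2 * np.pi * fc)

fc = 2000.0  # hypothetical crossover frequency
for freq in (100, 500, 1000, 2000, 5000, 10000):
    print(f"{freq:>6} Hz: {lr4_sum_group_delay(freq, fc) * 1e6:6.1f} us")
```

With these assumptions the delay sits around 225 µs below and at the crossover, rises somewhat above that just under fc, and falls to a few tens of microseconds by 5 kHz.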

http://www.diyaudio.com/forums/mult...rmonics-vocal-formant-range-630hz-4000hz.html

Is this the paper? Then Elias had this "covered" already

Rudolf

Hi Guys,

"....Tom Holman reports [10] that in his laboratory environment at the University of Southern California that is dominated by direct sound, a channel-to-channel time offset equal to one sample period at 48 kHz is audible. This equates to 20 μsec of inter-channel phase distortion across the entire audio band. Holman [10] also mentions, “one just noticeable difference in image shift between left and right ear inputs is 10 μsec....”.

But let's relax this tight requirement to 20 µsec for the sake of the exercise below.

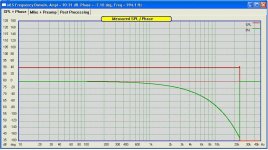

A 20 µs time difference translates into a phase difference across the audio band, as shown in the attached figure. The green line is the maximum phase difference allowed between stereo loudspeakers.

So, for instance, at 1000 Hz the allowable phase difference is only about 7 deg. I do not know of any audio standard that enforces this tolerance, and this is the same issue Watkinson was lamenting: the lack of a decent stereo standard. The BAS people are also on the right track.
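The conversion behind the figure can be sketched as follows, assuming the 20 µs is a pure, frequency-independent time offset between the two loudspeakers. The equivalent phase difference grows linearly with frequency, φ = 360° × f × τ: 0.72° at 100 Hz, 7.2° at 1 kHz, 72° at 10 kHz and 144° at 20 kHz.

```python
def delay_to_phase_deg(delay_s, freq_hz):
    """Phase shift in degrees produced by a pure time delay at one frequency."""
    return 360.0 * freq_hz * delay_s

# Phase equivalent of a 20 microsecond inter-channel offset across the audio band.
for f in (100, 1000, 5000, 10000, 20000):
    print(f"{f:>6} Hz: {delay_to_phase_deg(20e-6, f):6.1f} deg")
```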

Anyway, the overall picture emerging from this short review of papers is the importance of phase response in multi-channel loudspeaker system design. But not only that: phase also seems to play a vital role in maintaining the transient response of a loudspeaker, providing an undistorted waveform for the ear's correct two-stage analysis, localization being one of its stages.

As a solution, I fully endorse post #5795.

So what means of phase control are implemented in today's loudspeakers?

Best Regards,

Bohdan

Yes he did and I thanked him in another thread, but thanks Elias. The funny part is that on most things Griesinger would completely disagree with Elias. The papers gave me complete support for what I have been saying - except for the phase in the midband. That was new to me, but I am willing to change my beliefs when I see a compelling reason to.

Correction: it's the bundle of three papers further down the page, a must-read set IMO. Griesinger is not someone who makes stuff up. He is highly respected in the room acoustics field. I'm glad that I agree with him; I'd hate to have to argue that he was wrong!!

I have not found any of those papers to be compelling evidence for your claims. The only reputable one had nothing to do with the topic. Sorry, I'll stick with my previous opinion on this one.

A 20 µs threshold for image degradation is an absurd notion. That's only about 7 mm of lateral head movement. Over that distance, the image in my system does not change even a perceptible amount. Ten times that distance is still not a significant change. Thirty times is getting to be perceptible. That's about 1 ms, just about the number I agree is the correct one.
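A quick check of the time-to-distance arithmetic, assuming a speed of sound of 343 m/s (air at about 20 °C) and the common ±30° loudspeaker placement, where a small sideways head movement of x changes the path-length difference to the two speakers by roughly x (a simplification; the exact relation depends on geometry). With those assumptions 20 µs corresponds to about 6.9 mm, ten times that to about 69 mm (200 µs), and thirty times to about 206 mm, which is 0.6 ms.

```python
SPEED_OF_SOUND = 343.0  # m/s in air at about 20 degC (assumed)

def delay_to_path_mm(delay_s, c=SPEED_OF_SOUND):
    """Path-length difference in millimetres equivalent to a time offset."""
    return delay_s * c * 1000.0

# Multiples of the 20 microsecond figure and their path-length equivalents.
for factor in (1, 10, 30):
    delay = factor * 20e-6
    print(f"{factor:>2} x 20 us = {delay * 1e6:5.0f} us  ->  {delay_to_path_mm(delay):6.1f} mm")
```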

At any rate, I tire of talking about phase in a waveguide thread. Could this discussion be moved elsewhere?

Hi Earl,

And I'll stick with mine.

Anyway, my job here is done. Thanks to all of you for reading.

Best Regards,

Bohdan

Hi Guys,

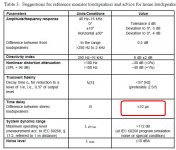

I am sorry for misleading you in my previous post. There is an AES document recommending a time delay of no more than 10 µs between stereo loudspeakers.

"AES TECHNICAL COUNCIL - Multichannel surround sound systems and operations.

This document was written by a subcommittee (writing group) of the AES Technical Committee on Multichannel and Binaural Audio Technology. Contributions and comments were also made by members of the full committee and other international organizations.

Writing Group: Francis Rumsey (Chair); David Griesinger; Tomlinson Holman; Mick Sawaguchi; Gerhard Steinke; Günther Theile; Toshio Wakatuki.

TECHNICAL DOCUMENT

Document AESTD1001.1.01-10"

http://www.aes.org/technical/documents/AESTD1001.pdf

Best Regards,

Bohdan

I wonder if anyone wants to get back to the measurement of HOMs.

As I mentioned in this post:

http://www.diyaudio.com/forums/multi-way/103872-geddes-waveguides-573.html#post3395989

Perhaps there is potential for a poor man's Schlieren setup...
