> After re-reading a couple of rsdio's posts I realize that I should address one point. I am simply stating that no resampling/anti-imaging algorithm that I have seen used currently even approaches the numerical limit of 64-bit floats.

I would largely agree, but it really depends upon your viewpoint. If you multiply two 32-bit floats, you already have the potential for 64-bit results. If you then multiply the resulting product by another 32-bit float, you've already reached the potential of exceeding 64-bit float registers. Whether anyone could ever hear the truncation noise in a 64-bit calculation is doubtful, but something is lost mathematically even if it probably isn't important musically. When considering division instead of multiplication, you go beyond 64-bit even faster.
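A quick way to check the multiplication argument is to compare floating-point products against exact rational arithmetic. This is only a sketch: the three values are arbitrary picks, and `to_f32` is a helper made up here to round a Python float to the nearest 32-bit float.

```python
import struct
from fractions import Fraction


def to_f32(x):
    """Round a Python float (64-bit) to the nearest 32-bit float value."""
    return struct.unpack("f", struct.pack("f", x))[0]


# Three arbitrary values that are exactly representable as 32-bit floats.
a, b, c = to_f32(1.1), to_f32(1.3), to_f32(1.7)

# Exact product of two 32-bit floats, computed with rationals.
exact_ab = Fraction(a) * Fraction(b)
ab_f64 = a * b  # Python floats are 64-bit, so this is a double multiply

two_factor_exact_in_f64 = Fraction(ab_f64) == exact_ab
two_factor_exact_in_f32 = Fraction(to_f32(ab_f64)) == exact_ab

# Multiply by a third 32-bit float and compare against the exact result.
exact_abc = exact_ab * Fraction(c)
abc_f64 = ab_f64 * c
three_factor_exact_in_f64 = Fraction(abc_f64) == exact_abc

print("a*b exact in 64-bit float:  ", two_factor_exact_in_f64)
print("a*b exact in 32-bit float:  ", two_factor_exact_in_f32)
print("a*b*c exact in 64-bit float:", three_factor_exact_in_f64)
```

For these values the two-factor product fits in a 64-bit float but not in a 32-bit one, and the three-factor product no longer fits in a 64-bit float either. Whether the lost bits matter audibly is a separate question.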

The standard practice is to truncate to a 'large' register (40-bit to 80-bit fixed, 32-bit or 64-bit float) and then dither down to 24-bit. I assume that I'll never be able to hear the truncation noise that occurred before the final dithering stage, but that doesn't mean it's safe to assume that nothing is using all bits in a 64-bit float calculation.
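As a rough illustration of the last step, here is a minimal sketch of dithering a higher-precision signal down to 24-bit integers. It assumes plain TPDF dither of about one LSB with no noise shaping, which is only one of several schemes in use; the function name and test signal are made up for the example.

```python
import math
import random

FULL_SCALE = 2 ** 23  # signed 24-bit range is -2**23 .. 2**23 - 1


def dither_to_24bit(samples, rng):
    """Quantize floats in [-1, 1) to 24-bit integers with TPDF dither."""
    out = []
    for s in samples:
        # Difference of two uniform values gives a triangular distribution
        # spanning roughly +/- 1 LSB.
        tpdf = rng.random() - rng.random()
        q = int(round(s * FULL_SCALE + tpdf))
        # Clamp to the representable 24-bit range.
        out.append(max(-FULL_SCALE, min(FULL_SCALE - 1, q)))
    return out


rng = random.Random(0)
samples = [0.5 * math.sin(2 * math.pi * 1000 * n / 48000) for n in range(480)]
quantized = dither_to_24bit(samples, rng)

# Worst-case error in LSBs: dither (under 1 LSB) plus rounding (up to 0.5 LSB).
max_error_lsb = max(abs(q - s * FULL_SCALE) for q, s in zip(quantized, samples))
print("max error in LSBs:", max_error_lsb)
```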

> As an example of the brute force approach, take an entire piece of music as one massive FFT, zero the bins past Fo, and do an inverse FFT. That way you use all of the information, no more, no less. Or run a properly overlapped 2^24 point FFT filter over the data. In both cases there is a HUGE latency for any current (home-style) computer.

That's not nearly going to work. Are you maintaining the phase for the pass bands? What do you do at the transition?

Unless your 'music' consists of nothing but sine waves at precise multiples of the sample rate, then your process as described above would result in a great deal of energy in those 'zeroed' bins. Your FFT-based filter will not have the abrupt frequency-domain response that you hope for.

By the way, the overlapped FFT would smear the time domain significantly.

There is no way to make a discontinuity in the frequency domain, not even if you use a giant FFT.

Who was the audio dealer, John something, wasn't it, Nelson? One of the first Levinson dealers, (at my suggestion, by the way).

It was Lee Terrell, who previously had worked for John Garland in San Jose, and it was the opening of Audio Excellence in San Francisco. Garland was a Levinson dealer, but Lee became a Threshold dealer.

> That's not nearly going to work. Are you maintaining the phase for the pass bands?

It will work, and preserve the correct amplitude and phase of everything in the pass band.

> Unless your 'music' consists of nothing but sine waves...

All music does.

e.g. A recording 500 seconds long consists of sine waves at multiples of 0.002Hz.

Quite a few years before, but the process was also quite a bit different. The idea was to take a data point from the original file, Fourier transform around it (on the order of 2048 points on either side), multiply the function by the inverse of the phase response of anti-aliasing and anti-imaging filters, then back transform it and place the "new" datapoint in a new file. This had to be performed on each point of the original file, so it was NOT something that could be done real-time. However, we didn't have to lose the bottom bit and we didn't have to do multiple digital filters; additionally, the user's CD player didn't need any fancy hardware, just the standard anti-imaging filter.
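The per-point procedure can be sketched roughly as follows. This is not the original implementation: the window is tiny rather than about 2048 points either side, a slow textbook DFT stands in for an FFT, and the phase response (`one_sample_delay_phase`) is a made-up stand-in for the real anti-aliasing and anti-imaging filters.

```python
import cmath
import math


def dft(x):
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def precorrect(signal, half_width, phase_of_bin):
    """For each sample: transform a window centred on it, cancel the given
    phase response, transform back, and keep only the centre point."""
    n = 2 * half_width + 1
    padded = [0.0] * half_width + list(signal) + [0.0] * half_width
    out = []
    for i in range(len(signal)):
        window = padded[i:i + n]  # centred on signal[i]
        spectrum = dft(window)
        corrected = []
        for k in range(n):
            signed_k = k if k <= half_width else k - n  # negative frequencies
            corrected.append(spectrum[k] * cmath.exp(-1j * phase_of_bin(signed_k, n)))
        out.append(idft(corrected)[half_width].real)
    return out


def one_sample_delay_phase(k, n):
    """Hypothetical phase response: a pure delay of one sample."""
    return -2 * math.pi * k / n


signal = [math.sin(0.3 * i) for i in range(12)]
advanced = precorrect(signal, 4, one_sample_delay_phase)
unchanged = precorrect(signal, 4, lambda k, n: 0.0)
```

With the one-sample-delay stand-in, each output point equals the following input sample, which is what cancelling that delay should give. With a zero phase response the input comes back unchanged.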

It sounds fairly involved but I think I understand what it offers. Did you provide for the different anti-imaging filters?

The strength of the HDCD system is that the key difficult components at each end have been worked out as a system. The composite effect of the filtering at each side (and dynamic range stuff as well) are all managed as a single system. While it's not completely transparent, it comes pretty close. The Pacific Microsonics seems to still be the best AtoD and DtoA solution for audio. Hopefully something soon will be on a par or better.

A large part of the limitations in current commercial recordings are the DAWs used and the marginal DSP processing used in many of them. It's really the same issue we fight here, but in a digital domain where good enough really is not good enough.

I think today's best perceptual noise shapers can dither CD to match HDCD audible S/N without the subchannel info and companding; all you need is playback DAC linearity to match.

Hi-res well beyond 14/44 is the only modern digital mastering practice worth talking about; no one records at 44.1 kHz.

> It will work, and preserve the correct amplitude and phase of everything in the pass band.

Perhaps, but it will not eliminate everything above the pass band.

> All music does.
>
> e.g. A recording 500 seconds long consists of sine waves at multiples of 0.002Hz.

How do you tune your fiddles so that they only produce these frequencies that are harmonically related to the sample rate?

Each individual note of each instrument could be analyzed perfectly by an FFT, but only if the sample rate is an even multiple of the fundamental frequency. Don't forget that if the string is stretched then the pitch will change, and the sample rate will need to change in lock step. As soon as you play multiple notes you lose all ability to perfectly analyze the waveforms without artifacts.

The fact is that analog music contains frequencies which cannot be perfectly processed by the FFT. The results are good enough for certain processes, but not for creating a brick wall filter. If you generate a pure sine wave that is in perfect synchronization with (a whole multiple of) the sample rate, then the FFT will show one bin with a non-zero magnitude, and the rest will show the noise floor. But if that sine wave is not perfectly matched (as would happen with acoustic music, as opposed to purely synthetic) then you'll see magnitude in several bins, not just the one that matches the frequency of the tone. If you were to try and filter out this one tone by zeroing out all of the bins above a certain frequency, you'd still be left with those bins that show a magnitude at lower frequencies, and thus part of the original tone will remain when you perform the inverse FFT. Multiply this effect by the tens of thousands of frequencies in a musical performance from the real world, and you can see that parts of the frequencies above your desired cutoff will still exist after the IFFT.
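The bin-spreading effect described above is easy to reproduce on a small scale. The sketch below uses a 64-point textbook DFT (chosen for clarity, not speed) and two made-up test tones, one with exactly 8 cycles in the window and one with 8.5.

```python
import cmath
import math


def dft(x):
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


N = 64
# Exactly 8 cycles in the window: the tone sits on a bin centre.
on_bin = [math.sin(2 * math.pi * 8 * t / N) for t in range(N)]
# 8.5 cycles in the window: the tone falls halfway between two bins.
off_bin = [math.sin(2 * math.pi * 8.5 * t / N) for t in range(N)]


def count_significant_bins(x, floor=1e-9):
    """Count bins from DC to Nyquist whose magnitude is above the rounding floor."""
    return sum(1 for v in dft(x)[:len(x) // 2 + 1] if abs(v) > floor)


on_count = count_significant_bins(on_bin)
off_count = count_significant_bins(off_bin)
print("bins above the floor, on-bin tone: ", on_count)
print("bins above the floor, off-bin tone:", off_count)
```

The on-bin tone lands in a single bin, while the off-bin tone shows magnitude across the spectrum. No window function is applied here, so this is the plain rectangular-window case.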

These concepts are easier to explain with pictures instead of words. I recommend Ken Steiglitz' "A Digital Signal Processing Primer," Chapter 8: Discrete Fourier Transform and FFT, page 168: 8.9 "A serious problem." You'll see in Fig. 9.2 that a single, pure sine wave appears in all of the FFT frequency bins. You cannot remove that one sine wave by zeroing out only some of the bins, and if you zero all of them you have silence.

Don't get me wrong: The FFT is a very powerful set of math, and there are many things that can be done. It's just that you guys think it can do more than it really can. There are even some very interesting things you can do in the test and measurement realm where you generate test tones that are precise multiples of the sample rate, and thus fall perfectly within a single frequency bin after the FFT has been calculated. Lots of companies are taking advantage of this. But you still cannot create a discontinuity in the frequency domain or the time domain without artifacts. The ideal brick wall filter is a discontinuity in the frequency domain; the ideal square wave is a discontinuity in the time domain; both exist only on paper and not in the real world. Even those vintage analog synthesizers - of which I am the proud owner of several examples - produce what is only labeled on the front panel as a "square wave" but in reality are not ideal square waves by any stretch of the imagination.

> ...could be analyzed perfectly by an FFT, but only if the sample rate is an even multiple of the fundamental frequency.

Yes, exactly!

Now think of the entire 500 second recording as a single cycle of a very complex waveform with a fundamental frequency of 0.002 Hz. If it's sampled at 48 kHz, then the sample rate is exactly 24,000,000 times the fundamental frequency, and your criterion is satisfied.

It doesn't matter if it's a recording of music, traffic noise or anything else. If you stick it in your CD player and hit the repeat button, you're going to hear something that repeats every 500 seconds. Therefore it has a fundamental frequency of 0.002Hz.
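The arithmetic, plus a small-scale check that an arbitrary block of samples is exactly a sum of harmonics of its own repeat rate, can be sketched like this. A 64-sample block of random numbers stands in for the 24-million-sample recording, and the DFT is a slow textbook version.

```python
import cmath
import math
import random


def dft(x):
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


sample_rate_hz = 48_000
duration_s = 500
total_samples = sample_rate_hz * duration_s  # 24,000,000
fundamental_hz = 1 / duration_s              # 0.002 Hz, also the bin spacing

# Stand-in for the recording: arbitrary, non-periodic-looking data.
rng = random.Random(1)
N = 64
block = [rng.uniform(-1.0, 1.0) for _ in range(N)]

# Analyse into N harmonics of the block's repeat rate, then resynthesise.
rebuilt = [v.real for v in idft(dft(block))]
max_error = max(abs(a - b) for a, b in zip(block, rebuilt))

print("samples in the full recording:", total_samples)
print("fundamental in Hz:", fundamental_hz)
print("round-trip error:", max_error)
```

The round trip returns the block to within rounding error, which is the sense in which any finite recording consists of sine waves at multiples of its repeat rate. It says nothing yet about what happens once bins are altered.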

> The ideal brick wall filter is a discontinuity in the frequency domain; the ideal square wave is a discontinuity in the time domain; both exist only on paper and not in the real world.

I think that what Scott said was that the solution converges to a brick wall filter i.e. it is the limit at infinity of some sequence of approximations.

You're right that you cannot create an ideal square wave, but you can get as close as you want by using a generator with higher and higher slew rates.

Similarly, you cannot physically create a perfect brick wall filter but you can get as close as you want (i.e. by processing more and more samples - I think).

For example if you process 2^10=1024 samples you get an approximation of the brick wall filter. By processing 2^16 samples you get a better one, and so on.

> It sounds fairly involved but I think I understand what it offers. Did you provide for the different anti-imaging filters?

No, the idea was to standardize the anti-imaging filter, then phase pre-correct for it at the recording/mastering end. It hung together as a system, and given the time (1985), if successful, the required anti-imaging filter could have ended up a de facto standard. All moot, of course- our management killed the project. It did give me a fun thing to do for a month, and I did end up having a delightful day with Dick Greiner (who was brought in to judge the feasibility and practicality- he liked it quite a bit, though was dubious about audibility), so it wasn't a total loss.

> Now think of the entire 500 second recording as a single cycle of a very complex waveform with a fundamental frequency of 0.002 Hz. If it's sampled at 48 kHz, then the sample rate is exactly 24,000,000 times the fundamental frequency, and your criterion is satisfied.

That makes some amount of sense, but it's not the whole picture. Have you actually tried implementing a brick wall filter using an FFT with a 24 million sample window? How did that work out for you?

My point is that nearly all frequencies in the original analog signal will not fall into just one frequency bin in the FFT, therefore you cannot entirely remove any of those frequencies by zeroing some of the bins. You end up with a reduced-amplitude version of the original frequency. That means your filter isn't an ideal brick wall. Check out the reference that I provided.
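To make the reduced-amplitude claim concrete, here is a small sketch that zeroes every bin at or above a cutoff in a single 64-sample block and measures how much energy of a stopband tone survives. The block size, cutoff and tone frequencies are arbitrary choices, and it only covers the single-block case with no overlap and no window.

```python
import cmath
import math


def dft(x):
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def brickwall(x, cutoff_bin):
    """Zero all bins at or above cutoff_bin (both halves of the spectrum)."""
    n = len(x)
    X = dft(x)
    for k in range(n):
        if min(k, n - k) >= cutoff_bin:
            X[k] = 0
    return [v.real for v in idft(X)]


def energy(x):
    return sum(v * v for v in x)


N, CUTOFF = 64, 16
# Both tones are above the cutoff; only the first sits on a bin centre.
on_bin = [math.sin(2 * math.pi * 20 * t / N) for t in range(N)]
off_bin = [math.sin(2 * math.pi * 20.5 * t / N) for t in range(N)]

residual_on = energy(brickwall(on_bin, CUTOFF)) / energy(on_bin)
residual_off = energy(brickwall(off_bin, CUTOFF)) / energy(off_bin)
print("surviving energy fraction, on-bin tone: ", residual_on)
print("surviving energy fraction, off-bin tone:", residual_off)
```

The on-bin tone is removed down to rounding error, while a small but clearly nonzero fraction of the off-bin tone survives in the pass band. How this scales to a window that spans the whole recording is exactly what is being argued in this thread, and the sketch does not settle it.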

Increasing the size of the FFT does not reduce the phenomenon where arbitrary continuous frequencies fall into multiple discrete frequency bins. The typical solution attempted is to change the shape of the window function, but that is a tradeoff between reducing the amplitude of far out side bins while increasing the amplitude of adjacent bins. Windowing is entirely ineffective when your window includes the entire musical piece.

> That's not nearly going to work. Are you maintaining the phase for the pass bands? What do you do at the transition?
>
> Unless your 'music' consists of nothing but sine waves at precise multiples of the sample rate, then your process as described above would result in a great deal of energy in those 'zeroed' bins. Your FFT-based filter will not have the abrupt frequency-domain response that you hope for.
>
> By the way, the overlapped FFT would smear the time domain significantly.
>
> There is no way to make a discontinuity in the frequency domain, not even if you use a giant FFT.

Again, I don't care about the phase; you keep assuming I'm not familiar with the theory. Overlapped FFT filtering is well known and can be shown to be exactly equivalent to circular convolution. I don't want a "discontinuity", I want the smallest, sharpest transition within my numerical resolution. BTW there is no "energy" between the bins. The FFT and the time domain data contain exactly the same information. There is no energy in zeroed bins (some extremely small numerical noise maybe). You need to review windowing if you think FFTs only work on music perfectly if the tones are exact bins.
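The equivalence for a single block can be checked numerically. The sketch below multiplies the bins of a 32-point DFT by a made-up low-pass mask and compares the result with direct circular convolution against the mask's impulse response. It covers only the single-block case, not the overlap bookkeeping.

```python
import cmath
import math
import random


def dft(x):
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def circular_convolve(x, h):
    n = len(x)
    return [sum(x[m] * h[(t - m) % n] for m in range(n)) for t in range(n)]


N = 32
# Keep bins below 8 (and their mirror images); zero the rest.
mask = [1.0 if min(k, N - k) < 8 else 0.0 for k in range(N)]
impulse_response = [v.real for v in idft(mask)]

rng = random.Random(2)
x = [rng.uniform(-1.0, 1.0) for _ in range(N)]

via_bins = [v.real for v in idft([Xk * m for Xk, m in zip(dft(x), mask)])]
via_convolution = circular_convolve(x, impulse_response)
max_difference = max(abs(a - b) for a, b in zip(via_bins, via_convolution))

# Re-analyse the filtered block and total the energy left in the zeroed bins.
stopband_energy = sum(abs(v) ** 2
                      for k, v in enumerate(dft(via_bins)) if mask[k] == 0.0)

print("max difference between the two methods:", max_difference)
print("energy left in zeroed bins:", stopband_energy)
```

Both routes agree to rounding error, and re-analysing the output with the same block size shows only numerical noise in the zeroed bins.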

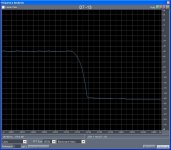

I don't have time to get Matlab up and demonstrate, but I remembered that Audition has a 24,000 point FFT filter. At 96k you can get a 30Hz transition at -108dB. This is only single precision float at 24000 point. I'm sure at 2^24 point double precision it will be <1Hz and approach 24bit noise.
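For scale, the bin spacing of an FFT is the sample rate divided by the FFT size. The sketch below only computes that spacing for the two sizes mentioned; the achievable transition width is some multiple of it that depends on the filter design, so these are not transition-width predictions.

```python
def bin_spacing_hz(sample_rate_hz, fft_size):
    """Frequency distance between adjacent FFT bins."""
    return sample_rate_hz / fft_size


audition_spacing = bin_spacing_hz(96_000, 24_000)
large_fft_spacing = bin_spacing_hz(96_000, 2 ** 24)

# The quoted 30 Hz transition expressed in bins of the 24,000-point FFT.
transition_in_bins = 30 / audition_spacing

print("bin spacing, 24,000-point FFT at 96 kHz:", audition_spacing, "Hz")
print("bin spacing, 2^24-point FFT at 96 kHz:  ", large_fft_spacing, "Hz")
print("30 Hz transition in 24,000-point bins:  ", transition_in_bins)
```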

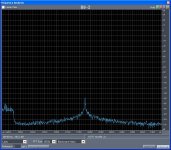

As for time smear: in this meager example I did the very non-physical sudden zeroing of the time-domain waveform. After about 120 ms there is only the numerical noise. This is full-scale noise over the entire 48 kHz bandwidth; music has very little energy beyond 20 kHz in any real-world example.

> If you were to try and filter out this one tone by zeroing out all of the bins above a certain frequency, you'd still be left with those bins that show a magnitude at lower frequencies, and thus part of the original tone will remain when you perform the inverse FFT. Multiply this effect by the tens of thousands of frequencies in a musical performance from the real world, and you can see that parts of the frequencies above your desired cutoff will still exist after the IFFT.

No, again, the sidelobes are a mathematical artifact; they do not exist. Here I took a 27.777 kHz sine wave at full scale, 0 dB. The same FFT filter, -156 dB attenuation, and notice the side lobes remain symmetrical and the ones that intruded on the passband are gone. Trust me, they were well above the -180 dB noise from the creation of the sine wave (single precision). I was surprised that the process actually removed some of the noise above transition.

In fact I might add that no amount of filtering can work on the sidelobes; they are truly an artifact. I'm sure at least one of the programs on that site uses FFT-based techniques. If you removed the restriction of real-time processing, I'm sure you could get one to do 192 to 44.1 with all noise and artifacts at the 24-bit level.

> Again I don't care about the phase, you keep assuming I'm not familiar with the theory.

Well, many people care about phase, including me. I really only objected to your claim that down-sampling can be 'perfect' ... if you relax the requirements, that hardly counts.

> BTW there is no "energy" between the bins. The FFT and the time domain data contain exactly the same information. There is no energy in zeroed bins (some extremely small numerical noise maybe). You need to review windowing if you think FFTs only work on music perfectly if the tones are exact bins.

I didn't say there was energy "between" the bins. I'm fully aware of the tradeoffs of windowing and how it works. I merely stated that windowing will not allow a discontinuity in the frequency domain.

You attached a graph that shows pass band frequencies as high as 24.74 kHz... are you sampling at 50 kHz? You'll have aliasing with the low pass response in your graph if you're downsampling to 48 kHz, and certainly to 44.1 kHz.

> No, again the sidelobes are a mathematical artifact they do not exist.

They do not exist in the actual time domain data (depending upon your point of view and which DSP expert you argue with), but they certainly exist in the FFT frequency domain data. If you (*) try to create a brick wall filter by zeroing a range of bins in the frequency domain then these 'nonexistent' side lobes will certainly affect your time domain waveform via IFFT.

* EDIT: I recall that it was someone else in this thread who suggested zeroing FFT frequency bins to implement a brick wall.

> Here I took a 27.777 kHz sine wave at full scale, 0 dB. The same FFT filter, -156 dB attenuation, and notice the side lobes remain symmetrical and the ones that intruded on the passband are gone. Trust me, they were well above the -180 dB noise from the creation of the sine wave (single precision). I was surprised that the process actually removed some of the noise above transition.

Your attached graph makes no sense. I don't see that anything is 'gone' in that graph. Again, the pass band is too high for 48 kHz sampling, so I really don't see what you're trying to say here.
