It's expected that the major load comes from the processing thread, but it wouldn't hurt to confirm, e.g. https://unixhealthcheck.com/blog?id=465

These are the threads created by v2.0.0a3 while encountering the performance issue:

Three threads are using CPU time. Two are clearly the capture and playback threads. The third - the one with the high CPU usage - isn't labelled but is presumably the processing thread.
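To get a labelled processing thread, the threads can be given explicit names when they are spawned. On Linux, Rust passes the name to the OS (truncated to 15 bytes), so it shows up in tools such as `top -H`. The sketch below is a hypothetical helper, not CamillaDSP's actual thread setup; the name `processing` is an assumption. It also reads `/proc/self/task` to list each thread's name and CPU time, which is roughly what per-thread views in `top` are based on.

```rust
use std::io;
use std::thread;

/// Spawn a worker thread with an explicit, OS-visible name (hypothetical helper).
pub fn spawn_named<F>(name: &str, f: F) -> io::Result<thread::JoinHandle<()>>
where
    F: FnOnce() + Send + 'static,
{
    thread::Builder::new().name(name.to_string()).spawn(f)
}

/// Linux only: (thread id, thread name, user+system CPU time in clock ticks)
/// for every thread of the current process, read from /proc/self/task.
#[cfg(target_os = "linux")]
pub fn list_threads() -> io::Result<Vec<(u32, String, u64)>> {
    let mut threads = Vec::new();
    for entry in std::fs::read_dir("/proc/self/task")? {
        let dir = entry?.path();
        let tid: u32 = match dir
            .file_name()
            .and_then(|n| n.to_str())
            .and_then(|n| n.parse().ok())
        {
            Some(tid) => tid,
            None => continue,
        };
        let name = std::fs::read_to_string(dir.join("comm"))?.trim_end().to_string();
        let stat = std::fs::read_to_string(dir.join("stat"))?;
        // The name inside `stat` is wrapped in parentheses and may contain spaces,
        // so only split what follows the last ')'. That part starts at field 3.
        let after_name = stat.rfind(')').map_or("", |i| &stat[i + 1..]);
        let fields: Vec<&str> = after_name.split_whitespace().collect();
        // utime and stime are fields 14 and 15 in proc(5): indices 11 and 12 here.
        let field = |i: usize| fields.get(i).and_then(|v| v.parse::<u64>().ok()).unwrap_or(0);
        threads.push((tid, name, field(11) + field(12)));
    }
    Ok(threads)
}
```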

I also ran the test on a Raspberry Pi 3B+ and didn't find a performance issue, so it looks like it's specific to the combination of Intel + Linux.

Thanks. You've already used perf; compiling with --release plus debug info (https://nnethercote.github.io/perf-book/profiling.html#debug-info) and trying to get more perf detail about method calls, as in https://rust-lang.github.io/packed_simd/perf-guide/prof/linux.html, might tell more.


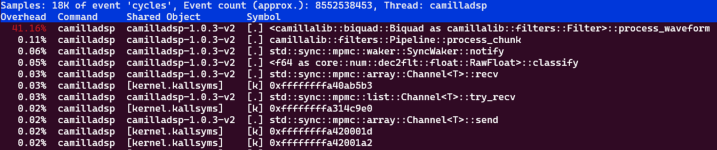

Here is some more information from perf showing the functions executed in the processing thread. I haven't explored the extra debug options yet, but will do that. For these tests I've gone back to 1.0.3, as that lets me compare the two performance states just by changing the config.

First is 1.0.3 from GitHub (default compiler settings) with the Gain filter, i.e. good performance:

Next is the exact same binary without the Gain filter, i.e. bad performance:

The two look very similar except:

- Gain:

- The number of "event cycles" goes up. I assume this reflects the longer overall runtime.

- The percentage of time spent in Biquad:

I'm not sure what to make of this, other than that the exact same code is running slower for no apparent reason. The "perf stat" results I posted earlier are for these same scenarios, so the slowdown seems to be due to reduced IPC (instructions per cycle), but there's no obvious reason for that.

Finally, for comparison, here's v1.0.3 compiled with target-cpu=x86-64-v2, without the Gain filter (i.e. the same config as test 2):

The performance is pretty much the same as in test 1, so it's good to see the problem has gone away, but again there's no obvious reason why.

I've also opened a thread on the Rust forum to see if anyone can explain this (https://users.rust-lang.org/t/unexplained-order-of-magnitude-drop-in-performance/100406). One suggestion was that it might be related to Spectre/Meltdown mitigations, which was my thought too. It looks like "target-cpu=x86-64-v2" avoids the problem, but I'm still curious what is going on.
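To confirm that a particular binary really was built with `-C target-cpu=x86-64-v2`, a small check like the one below could be compiled into a test build. `cfg!(target_feature = ...)` reports what the compiler enabled at build time, while `is_x86_feature_detected!` reports what the running CPU supports. The function names are hypothetical, and only the SSE3/SSSE3/SSE4.1/SSE4.2/POPCNT part of the x86-64-v2 level is checked; its remaining features (CMPXCHG16B, LAHF/SAHF) are not.

```rust
/// True if this binary was compiled with the SSE3, SSSE3, SSE4.1, SSE4.2 and
/// POPCNT features that target-cpu=x86-64-v2 enables.
pub fn compiled_for_x86_64_v2() -> bool {
    cfg!(target_feature = "sse3")
        && cfg!(target_feature = "ssse3")
        && cfg!(target_feature = "sse4.1")
        && cfg!(target_feature = "sse4.2")
        && cfg!(target_feature = "popcnt")
}

/// True if the CPU the binary is running on supports those same features.
#[cfg(any(target_arch = "x86", target_arch = "x86_64"))]
pub fn cpu_supports_x86_64_v2() -> bool {
    is_x86_feature_detected!("sse3")
        && is_x86_feature_detected!("ssse3")
        && is_x86_feature_detected!("sse4.1")
        && is_x86_feature_detected!("sse4.2")
        && is_x86_feature_detected!("popcnt")
}
```

A default `cargo build --release` on x86_64 typically targets the baseline x86-64 level, so `compiled_for_x86_64_v2()` would normally return false there, even on a CPU that supports the features.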


@spfenwick

ipsum lorem smiley....

test test.. this is an 'inline-code' marked text to avoid :P becoming a smiley....

ps. The post is editable for 30 minutes after posting it; after that the edit button disappears, except for post #1, which can be edited indefinitely by the thread starter.

Sorry for the off-topic, cheers.

Thanks. I didn't realise my post was being "smilied" when I wrote it.

@spfenwick

I forgot to mention that you can press the 'Preview' button on the right of the post editing window (desktop PC browser) to see how the post will look before posting it; any smileys and other artifacts will show up there.

@spfenwick: did you do the tests above on Linux, or on the virtualized VSL2?

I assume rather than VSL2 you mean WSL2. The tests above were on Linux, but the same issues show up on WSL2 as well.

The thread over at the Rust forum (https://users.rust-lang.org/t/unexplained-order-of-magnitude-drop-in-performance/100406) may be starting to shed some light. The issue seems to be related to denormal floating-point numbers, which carry a significant performance penalty on Intel but not on AMD. Setting some flags in the x86 MXCSR register to treat denormal numbers as zero makes the problem go away. That does change the semantics of floating-point arithmetic, so it may not be an appropriate solution to use everywhere, especially as CamillaDSP uses FFTs for convolution.

I'm not sure that explains everything - especially why the problem is Linux-only or why it doesn't occur with "target-cpu=x86-64-v2" - but at least it's a start.
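The flags that treat denormals as zero are presumably FTZ (Flush To Zero, MXCSR bit 15, which replaces subnormal results with zero) and DAZ (Denormals Are Zero, bit 6, which reads subnormal inputs as zero). Below is a minimal sketch of turning them on from Rust, assuming an x86_64 target; the module and function names are hypothetical. MXCSR is per-thread state, so this would have to run in the processing thread itself, and it then affects all SSE/AVX float math in that thread, FFTs included. Recent Rust versions deprecate `_mm_getcsr`/`_mm_setcsr` in favour of inline assembly, and the Rust docs caution that the compiler assumes the default floating-point environment, so treat this as an experiment rather than a recommended fix.

```rust
/// MXCSR bit 15, Flush To Zero: subnormal results are replaced by zero.
pub const FTZ: u32 = 1 << 15;
/// MXCSR bit 6, Denormals Are Zero: subnormal inputs are treated as zero.
pub const DAZ: u32 = 1 << 6;

#[cfg(target_arch = "x86_64")]
#[allow(deprecated)] // newer Rust deprecates these intrinsics in favour of inline asm
pub mod fpenv {
    use std::arch::x86_64::{_mm_getcsr, _mm_setcsr};

    /// Enable FTZ and DAZ for the *current thread* and return the old MXCSR value.
    pub fn enable_ftz_daz() -> u32 {
        unsafe {
            let old = _mm_getcsr();
            _mm_setcsr(old | super::FTZ | super::DAZ);
            old
        }
    }

    /// Restore an MXCSR value previously returned by `enable_ftz_daz`.
    pub fn restore(old: u32) {
        unsafe { _mm_setcsr(old) }
    }
}
```

With the flags set, halving `f64::MIN_POSITIVE` (the smallest normal value) gives 0.0 instead of a subnormal number; after restoring MXCSR the normal IEEE behaviour is back.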

Amazing catch, hats off! That discussion on the Rust forum shows that Rust devs are truly low-level CPU connoisseurs. Actually, I had never heard of denormals; I feel like a freshman.

This article https://www.earlevel.com/main/2019/04/19/floating-point-denormals/ suggests adding tiny alternating positive and negative DC offsets to the DSP buffer to avoid the denormals. But I don't quite understand how a tiny offset removes the denormals when the stream consists of both negative and positive numbers. Perhaps because the chance of a negative value landing in the denormal range after adding the positive DC shift is much lower than when processing a whole stream of samples close to zero, where basically all values fall into the denormal range?
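A minimal sketch of the offset idea, assuming f64 samples; the `AntiDenormal` name and the 1e-20 magnitude are hypothetical choices, not taken from the article or from CamillaDSP. While a real signal is playing, the samples are far above the denormal range anyway; the trouble starts when the input goes silent and a recursive filter's state decays towards zero. With the offset added, the state settles around a tiny but still normal value instead of decaying into the subnormal range. Alternating the sign on every buffer presumably keeps the average offset at zero, so no net DC is added.

```rust
/// Hypothetical helper: adds a tiny offset to every sample of a buffer,
/// flipping the sign of the offset on each call (i.e. for each buffer).
pub struct AntiDenormal {
    offset: f64,
}

impl AntiDenormal {
    /// `magnitude` is an assumption: it only needs to be far above
    /// f64::MIN_POSITIVE (about 2.2e-308) and far below audible levels.
    pub fn new(magnitude: f64) -> Self {
        AntiDenormal { offset: magnitude }
    }

    /// Add the current offset to all samples, then flip its sign.
    pub fn apply(&mut self, buffer: &mut [f64]) {
        for sample in buffer.iter_mut() {
            *sample += self.offset;
        }
        self.offset = -self.offset;
    }
}
```

As a usage example, feeding silent 64-sample buffers through `apply` and then through a one-pole decay `y = 0.5 * y + x` keeps `y` around ±1e-20, while the same decay with plain zeros eventually passes through the subnormal range on its way to zero.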


It took me back to studying Computer Science at university too many years ago. I don't think I've come across them since then.

Oh it's denormals! I did not expect those to pop up while processing a non-zero signal.

What it does now is to flush any stored denormals after each chunk.

That solved an issue where the CPU load increased a few seconds after the signal went quiet.

But apparently these nasty things can show up during the biquad calculations, without ending up in s1 or s2. This will need some investigation...

This is the method that does the flushing:

```rust
// Excerpt from the Biquad filter impl: `s1` and `s2` are the filter state
// variables, and `trace!` is a logging macro.
/// Flush stored subnormal numbers to zero.
fn flush_subnormals(&mut self) {
    if self.s1.is_subnormal() {
        trace!("Biquad filter '{}', flushing subnormal s1", self.name);
        self.s1 = 0.0;
    }
    if self.s2.is_subnormal() {
        trace!("Biquad filter '{}', flushing subnormal s2", self.name);
        self.s2 = 0.0;
    }
}
```

I implemented a biquad in Python to make it easier to track the values. It didn't help: I can't explain how there can be denormals while a sine is playing.

This is the output, and the internal variables, while processing a 1 kHz sine at a 48 kHz sampling rate. The filter is the same Peaking filter as @spfenwick used in the example.

Blue is the signal, green and orange are the s1 and s2 state variables, all shown on a dB scale. While the sine wave is playing, they all stay above -150 dB, which is well within what can be represented with normal numbers (with a margin of over 1000 dB!).
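The size of that margin is easy to check. The smallest normal f64 value, `f64::MIN_POSITIVE` (about 2.2e-308), corresponds to roughly -6153 dB relative to 1.0, so a state variable at -150 dB is about 6000 dB above the subnormal range. For f32 (smallest normal about 1.2e-38) the threshold is roughly -759 dB. The helper names below are hypothetical, and dB is taken as 20·log10 of the amplitude.

```rust
/// Convert a linear amplitude to dB relative to 1.0 (20 * log10 convention).
pub fn db(amplitude: f64) -> f64 {
    20.0 * amplitude.abs().log10()
}

/// Headroom in dB between a level (in dB) and the smallest normal f64.
pub fn margin_to_subnormal_f64(level_db: f64) -> f64 {
    level_db - db(f64::MIN_POSITIVE)
}
```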

Then the signal ends at 3 seconds, and the variables all start dropping until they are low enough to need denormals, some seconds after the plot ends. That is the problem that was solved by flushing denormals after each chunk.
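The same experiment can be sketched in Rust. This is not CamillaDSP's filter code: it is a minimal transposed direct form II biquad (one common structure with two state variables like `s1` and `s2`) using the peaking EQ formulas from the RBJ Audio EQ Cookbook. The parameters used in the usage example (48 kHz, 1 kHz centre, +6 dB, Q = 1) are hypothetical stand-ins, since the exact filter from the example isn't given here. The `flush_subnormals` method follows the same idea as the excerpt above.

```rust
use std::f64::consts::PI;

/// Minimal transposed direct form II biquad (sketch, not CamillaDSP's code).
pub struct Biquad {
    b0: f64,
    b1: f64,
    b2: f64,
    a1: f64,
    a2: f64,
    pub s1: f64,
    pub s2: f64,
}

impl Biquad {
    /// Peaking EQ from the RBJ Audio EQ Cookbook, normalized so that a0 = 1.
    pub fn peaking(fs: f64, f0: f64, gain_db: f64, q: f64) -> Self {
        let a = 10f64.powf(gain_db / 40.0);
        let w0 = 2.0 * PI * f0 / fs;
        let alpha = w0.sin() / (2.0 * q);
        let cos_w0 = w0.cos();
        let a0 = 1.0 + alpha / a;
        Biquad {
            b0: (1.0 + alpha * a) / a0,
            b1: -2.0 * cos_w0 / a0,
            b2: (1.0 - alpha * a) / a0,
            a1: -2.0 * cos_w0 / a0,
            a2: (1.0 - alpha / a) / a0,
            s1: 0.0,
            s2: 0.0,
        }
    }

    /// Process one sample and update the two state variables.
    pub fn process(&mut self, x: f64) -> f64 {
        let y = self.b0 * x + self.s1;
        self.s1 = self.b1 * x - self.a1 * y + self.s2;
        self.s2 = self.b2 * x - self.a2 * y;
        y
    }

    /// Zero any state variable that has become subnormal.
    pub fn flush_subnormals(&mut self) {
        if self.s1.is_subnormal() {
            self.s1 = 0.0;
        }
        if self.s2.is_subnormal() {
            self.s2 = 0.0;
        }
    }

    /// True if either state variable is currently subnormal.
    pub fn state_is_subnormal(&self) -> bool {
        self.s1.is_subnormal() || self.s2.is_subnormal()
    }
}
```

With these assumed parameters the state never becomes subnormal while a 1 kHz sine is playing; it only reaches the subnormal range after the input goes silent, and flushing after each 1024-sample chunk brings it back to exactly zero.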

This is as far as I have gotten today. To be continued..


I'm having trouble understanding it too. Even without the analysis you've done, it seems intuitive that any audio signal would be way above the level of double-precision denormals. Aside from the decay after the signal ends, a sample randomly falling below 10^-308 wouldn't be expected within the lifetime of the universe.

And even if denormals are somehow happening, a Gain of 0 dB is just a multiplication by 1.0, so it should make no difference.

Let me know if there are any tests you'd like me to run on an Intel CPU.
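That point can be checked numerically. Assuming the Gain filter converts dB to a linear factor with 10^(dB/20) and multiplies each sample by it (an assumption about CamillaDSP's implementation, and the helper names are hypothetical), 0 dB gives a factor of exactly 1.0, and IEEE 754 multiplication by 1.0 returns its input unchanged, subnormals included, as long as FTZ/DAZ are off. The sketch checks this bit for bit, so the samples passed on should be identical with or without the filter.

```rust
/// Linear gain factor for a gain in dB (assumed 20 * log10 amplitude convention).
pub fn db_to_gain(gain_db: f64) -> f64 {
    10f64.powf(gain_db / 20.0)
}

/// Multiply every sample in the buffer by `gain`, in place.
pub fn apply_gain(buffer: &mut [f64], gain: f64) {
    for sample in buffer.iter_mut() {
        *sample *= gain;
    }
}
```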


An occasional denormal doesn't cause any trouble, just a temporary slowdown for one or a few math operations. It's not a problem until there are lots of them.

Will do! But at the moment I have no idea what to try next 🤔

Maybe to offer a suggestion, wrong as it may be, just to see if it sparks anything when troubleshooting:

Could it be that it’s not the filter that causes the denormals, but that they are already present in the input thread, spuriously or not?

Did not yet take the time to do a code run-through.
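One way to test this idea would be to count subnormal samples in each chunk as it arrives from the capture side. The helper names below are hypothetical. The second function shows why integer capture formats seem an unlikely source: assuming samples are converted to float by dividing by 2^(bits-1), the smallest non-zero value from a 32-bit integer sample is 2^-31 (about 4.7e-10), far above the subnormal range. Subnormal input would therefore have to arrive through a floating-point capture format.

```rust
/// Count the subnormal samples in one channel of a chunk.
pub fn count_subnormals(samples: &[f64]) -> usize {
    samples.iter().filter(|s| s.is_subnormal()).count()
}

/// Smallest non-zero magnitude produced when a signed integer sample of
/// `bits` width is scaled by 1 / 2^(bits - 1) (assumed conversion).
pub fn smallest_int_step(bits: u32) -> f64 {
    1.0 / (1u64 << (bits - 1)) as f64
}
```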

